I have a four-host vSAN cluster running vSAN 6.2. Recently, the Performance service check in vSAN Health began reporting that two of the hosts were not contributing stats.

The following are all the steps I tried while troubleshooting, and ultimately fixing, the issue in my environment. Some of the steps did not fix my issue, but they may apply to your situation. P.S. I opened a VMware support case for this issue. The support engineer did not solve it directly, but he did give me a hint about the cause, which led me to the solution.

- Turn the Performance service off and back on in the vSphere Web Client: vSAN cluster, Manage, Settings, Health and Performance.

- Turn off the Performance service, restart the vSAN management agent on the host with `/etc/init.d/vsanmgmtd restart`, then turn the Performance service back on.

- Place the vSAN host in maintenance mode and restart the host.

- SSH to the vCenter Server Appliance and restart the vmware-vpxd service with `service vmware-vpxd restart`.

- Verify that the vSAN storage provider of each vSAN host is online in the vSphere Web Client: vCenter Server, Manage, Storage Providers. If a host’s vSAN provider is offline, unregister that host’s storage provider and synchronize all vSAN storage providers. This brings the host’s vSAN storage provider back online.

Caution: doing this can cause the VMs on the host to fail over to other hosts in the cluster.

- (I think this is the step that started leading me to the ultimate fix.) Check the certificate info of each vSAN host under Storage Providers. All of the certificates should be issued by the same Platform Services Controller (my vCenter is a vCSA with an external PSC rather than an embedded PSC). In my case, the certificates of the two “problem” vSAN hosts were issued by the VC host, while the certificates of the “good” vSAN hosts were issued by the PSC host. I don’t know why these hosts ended up with different certificate issuers, since I don’t have the history of how the PSC and VC were deployed.

- To further confirm that the ESXi host certificate is the problem:

  - Log in to vCenter Server as “administrator@vsphere.local”.

  - Go to Home, Administration, Deployment, System Configuration, Nodes, PSC node, Manage, Certificate Authority (if you select the VC node, there is no Certificate Authority tab under Manage).

  - Enter the password of “administrator@vsphere.local” again.

  - Under Active Certificates, all the ESXi hosts are listed except the two “problem” vSAN hosts.

  - It makes sense that the certificates of the two “problem” vSAN hosts are missing here, because they were issued by the VC host, not the PSC host. What does not make sense is how the hosts received the “problem” certificates in the first place, since there is no Certificate Authority on the VC host.

- Once the cause is identified, the fix is to re-issue the certificates of the two “problem” vSAN hosts:

  - In the vSphere Web Client, select the “problem” vSAN host, then Manage, Settings, Certificate.

  - This page also shows that the host certificate was issued by the wrong host (the VC host).

  - Click Renew to request a new certificate.

  - Caution: as soon as I clicked the Renew button, the host’s HA agent was restarted. Some VMs on the host failed over to the remaining hosts, although the VMs appeared to have no downtime.

Before renewing the certificate

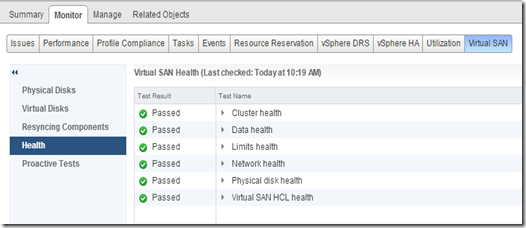

After renewing the certificate

- Once the host certificates were re-issued by the PSC, the vSAN Performance service check showed “Passed”.
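The issuer comparison I did in the Web Client can probably also be done from the command line. The following is only a minimal sketch, not something I ran as part of the original troubleshooting. It assumes a workstation with `openssl`, `sort`, and `awk`, and it assumes that each ESXi host presents its host certificate on port 443. The function names `get_issuer` and `group_by_issuer` are hypothetical names made up for this example.

```shell
#!/bin/sh
# Sketch: group ESXi hosts by the issuer of the certificate they present.
# Assumption: each host serves its host certificate on TCP port 443.

# Print "host<TAB>issuer" for one host.
get_issuer() {
    host="$1"
    issuer=$(openssl s_client -connect "${host}:443" -servername "$host" \
                 </dev/null 2>/dev/null \
             | openssl x509 -noout -issuer 2>/dev/null)
    # Fall back to a marker when the certificate could not be read.
    printf '%s\t%s\n' "$host" "${issuer:-unavailable}"
}

# Read "host<TAB>issuer" lines on stdin and print one line per issuer,
# followed by the hosts whose certificate was issued by it.
group_by_issuer() {
    tab=$(printf '\t')
    LC_ALL=C sort -t "$tab" -k2,2 -k1,1 | awk -F '\t' '
        $2 != prev { if (NR > 1) printf "\n"; printf "%s:", $2; prev = $2 }
        { printf " %s", $1 }
        END { if (NR > 0) printf "\n" }'
}

# Only contact hosts when host names are passed as arguments.
if [ "$#" -gt 0 ]; then
    for h in "$@"; do
        get_issuer "$h"
    done | group_by_issuer
fi
```

Saved under a hypothetical name such as `check-issuers.sh`, it would be run as `sh check-issuers.sh esx1.example.com esx2.example.com` (placeholder host names). Hosts that share an issuer end up on the same line, so a host whose certificate came from a different issuer stands out. I group rather than flag a "minority" issuer because in a case like mine, with two good and two problem hosts, there is no majority to compare against.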

Conclusion

- In my case, the cause of the vSAN Performance service “Host Not Contributing Stats” warning was that the “problem” vSAN hosts had the wrong host certificate.

- I don’t know how these “problem” hosts received the wrong host certificate.

- When the vCSA uses an external PSC, the host certificates are issued by the PSC host.

- Re-issuing or renewing the host certificate restarts the host’s HA agent, which can cause VMs on the host to fail over to other hosts.