Source: Configuring Modes of Packet Forwarding


NetScaler Topologies Comparison

Source: Understanding Common Network Topologies

| Topology | One-Arm: Single Subnet | One-Arm: Multiple Subnets |
|---|---|---|
| Client/Server IP | on the same subnet | on different subnets |
| VIP | on the NetScaler | on the NetScaler |
| SNIP | n/a | private subnet |
| NSIP | public subnet | private subnet |
| MIP | public subnet | n/a |
| Server IP | public subnet | private subnet |
| Layer 2 Mode | n/a | n/a |
| Use SNIP Option | n/a | must enable |
| Others | connect one of the NICs to the switch | connect one of the NICs to the switch |
| Diagram | | |
| Task Overview | To deploy a NetScaler in one-arm mode with a single subnet: 1. Configure the NSIP, MIP, and the default gateway, as described in "Configuring the NetScaler IP Address (NSIP)". 2. Configure the virtual server and the services, as described in "Creating a Virtual Server" and "Configuring Services". 3. Connect one of the network interfaces to the switch. | To deploy a NetScaler appliance in one-arm mode with multiple subnets: 1. Configure the NSIP and the default gateway, as described in "Configuring the NetScaler IP Address (NSIP)". 2. Configure the SNIP and enable the USNIP option, as described in "Configuring Subnet IP Addresses". 3. Configure the virtual server and the services, as described in "Creating a Virtual Server" and "Configuring Services". 4. Connect one of the network interfaces to the switch. |

NetScaler Topologies Comparison

Source: Understanding Common Network Topologies

| Topology | Two-Arm (inline): Multiple Subnets | Two-Arm (inline): Transparent | One-Arm: Single Subnet | One-Arm: Multiple Subnets |
|---|---|---|---|---|
| Client/Server IP | on different subnets | on the same subnet | on the same subnet | on different subnets |
| VIP | public subnet | no VIP | on the NetScaler | on the NetScaler |
| SNIP | private subnet | n/a | n/a | private subnet |
| NSIP | private subnet | public subnet | public subnet | private subnet |
| MIP | n/a | public subnet | public subnet | n/a |
| Server IP | private subnet | public subnet; configure the default gateway as the MIP | public subnet | private subnet |
| Layer 2 Mode | n/a | must enable | n/a | n/a |
| Use SNIP Option | must enable | n/a | n/a | must enable |
| Others | the most commonly used topology | used when the clients need to access the servers directly; the NetScaler is placed between the client and the server | connect one of the NICs to the switch | connect one of the NICs to the switch |
| Diagram | | | | |

Extend a Linux LVM Volume on a VM - Part 3

This is part 3 of extending a Linux LVM volume. See part 1 and part 2.

Part 3 is similar to part 2, where a partition is used as the PV. Instead of creating a new partition in the free disk space (as in part 2), delete the last partition on the disk and recreate it at a larger size. This is useful when all the primary partitions (1 - 4) are already in use.

Extend an LVM volume when a partition is the PV

-

Increase the VM’s hard disk size in vSphere Client

- If there is a VM snapshot on the disk, its size cannot be changed. Remove all the snapshots first

- After increasing the disk size, take a snapshot as the backup

- Rescan the SCSI bus to verify the OS see the new space on the disk

- ls /sys/class/scsi_host/

- echo “- - -“ > /sys/class/scsci_host/<host_name>/scan

- tail -f /var/log/message

- or

- ls /sys/class/scsi_disk/

- each ‘1’ > /sys/class/scsi_disk/<0\:0\:0\:0>/device/rescan

- tail -f /var/log/message

- fdisk -l

- Prepare the disk partition

- fdisk -l

- fdisk </dev/sdb>

- p - print the partition table, note the last partition number in use

- d - delete parition

- p - primary partition

- <X> - partition number, enter the last partition number from the previous p - print the partition table command

- n - add a new partition

- p - primary partition

- <X> - partition number, enter the partition number was deleted in the previous step

- default - the begining of the cylinder in the original partition

- default - the last of the free cylinder

- t - change a partition’s system id

- <X> - partition number, enter the partition number was recreated in the previous step

- 8e - Linux LVM

- w - write table to disk and exit

- fdisk -l to verify the new partition size

- Update partition table changes to kernel

- reboot

- or partprobe </dev/sdb>

- Update (04/18/2016): In RHEL 6, partprobe will only trigger the OS to update the partitions on a disk that none of its partitions are in use (e.g. mounted). If any partition on a disk is in use, partprobe will not trigger the OS to update partition in the system because it it considered unsafe in some situations. So a reboot is required. see “How to use a new partition in RHEL6 without reboot?”

- Resize the PV

- pvresize </dev/sdb3>

- Verifty the VG automatically sees the new space

- vgs

- Extend the LV

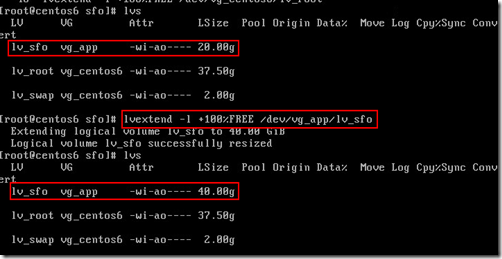

- lvextend -l +100%FREE</dev/volume_group_name>/<logical_volume_name>

- or lvextend -L+<size> /dev/<volume_group_name>/<logical_volume_name>

- lvs

- Resize the file system

- resize2fs /dev/<volume_group_name>/<logical_volume_name>

- df -h

- Remove the VM snapshot once confirming the data intact
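The PV is only still readable if the recreated partition begins exactly where the old one did, so it can help to record the start before running fdisk and compare it afterwards. The following is a minimal sketch, assuming the kernel exposes the partition's start sector at `/sys/block/<disk>/<partition>/start`. The names `part_start` and `check_same_start` and the `SYSFS_ROOT` override are hypothetical, introduced only for illustration; they are not part of the original procedure.

```shell
#!/bin/sh
# Sketch: confirm a recreated partition starts at the same sector as before.
# SYSFS_ROOT is a hypothetical override so the functions can be tried
# against a scratch directory instead of the real /sys.

# Print the start sector of a partition, e.g. part_start sdb sdb3
part_start() {
    cat "${SYSFS_ROOT:-/sys}/block/$1/$2/start"
}

# check_same_start <saved_start> <disk> <partition>
# Returns 0 when the current start sector equals the saved one, 1 otherwise.
check_same_start() {
    saved=$1
    current=$(part_start "$2" "$3") || return 1
    if [ "$saved" = "$current" ]; then
        echo "OK: $3 still starts at sector $current"
    else
        echo "MISMATCH: $3 started at $saved, now starts at $current" >&2
        return 1
    fi
}
```

One possible use: run `before=$(part_start sdb sdb3)` before step 3, then after the kernel has re-read the partition table (step 4) run `check_same_start "$before" sdb sdb3` and continue to `pvresize` only if it succeeds. The value in sysfs reflects the kernel's view, so the check is meaningful only after the reboot or partprobe.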

Extend a Linux LVM Volume on a VM - Part 2

This is part 2 of extending a Linux LVM volume. See part 1 for the case where the entire disk is the PV.

Extend an LVM volume when a partition is the PV

1. Increase the VM’s hard disk size in vSphere Client
   - If there is a VM snapshot on the disk, its size cannot be changed. Remove all the snapshots first.
   - After increasing the disk size, take a snapshot as the backup.
2. Rescan the SCSI bus to verify the OS sees the new space on the disk
   - `ls /sys/class/scsi_host/`
   - `echo "- - -" > /sys/class/scsi_host/<host_name>/scan`
   - `tail -f /var/log/messages`
   - or
   - `ls /sys/class/scsi_disk/`
   - `echo '1' > /sys/class/scsi_disk/<0\:0\:0\:0>/device/rescan`
   - `tail -f /var/log/messages`
   - `fdisk -l`
3. Prepare the disk partition
   - `fdisk -l`
   - `fdisk </dev/sdb>`
     - `p` - print the partition table; note the next available partition number
     - `n` - add a new partition
       - `p` - primary partition
       - `<X>` - partition number; enter the next available partition number from the previous `p` command
       - default - first cylinder; the beginning of the free space
       - default - last cylinder; the end of the free space
     - `t` - change a partition’s system id
       - `<X>` - partition number; enter the number of the partition that was just created
       - `8e` - Linux LVM
     - `w` - write the table to disk and exit
   - `fdisk -l` to verify the new partition
4. Update partition table changes to kernel
   - `reboot`
   - or `partprobe </dev/sdb>`
   - Update (04/18/2016): In RHEL 6, partprobe only triggers the OS to update the partitions on a disk when none of its partitions are in use (e.g. mounted). If any partition on the disk is in use, partprobe will not update the partitions in the system because it is considered unsafe in some situations, so a reboot is required. See “How to use a new partition in RHEL6 without reboot?”
5. Initialize the disk partition
   - `pvcreate </dev/sdb3>`
6. Extend the VG
   - use `vgdisplay` to determine the volume group name
   - `vgextend <volume_group_name> </dev/sdb3>`
   - `vgs`
7. Extend the LV
   - `lvextend -l +100%FREE /dev/<volume_group_name>/<logical_volume_name>`
   - or `lvextend -L +<size> /dev/<volume_group_name>/<logical_volume_name>`
   - `lvs`
8. Resize the file system
   - `resize2fs /dev/<volume_group_name>/<logical_volume_name>`
   - `df -h`
9. Remove the VM snapshot once you have confirmed the data is intact
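Step 3 asks for the next available partition number. The sketch below shows one way to work it out from a list of partition names instead of reading the fdisk output by eye. It assumes an MBR disk (at most four primary partitions) and names of the form `sdb1`, `sdb2`; the function name `next_partition_number` is hypothetical, and it simply returns the highest existing number plus one.

```shell
#!/bin/sh
# Sketch: work out the next primary partition number on an MBR disk.
# Reads partition names (one per line, e.g. sdb1, sdb2) on stdin;
# the disk name (e.g. sdb) is the first argument.
next_partition_number() {
    disk=$1
    max=0
    while read -r name; do
        num=${name#"$disk"}          # strip the disk prefix: sdb3 -> 3
        case $num in
            ''|*[!0-9]*) continue ;; # skip the disk itself and odd names
        esac
        if [ "$num" -gt "$max" ]; then
            max=$num
        fi
    done
    next=$((max + 1))
    if [ "$next" -gt 4 ]; then
        echo "no free primary partition number on $disk" >&2
        return 1
    fi
    echo "$next"
}
```

The names could be supplied with something like `lsblk -ln -o NAME /dev/sdb | next_partition_number sdb`. When the function fails because all four primary partitions are taken, the delete-and-recreate approach from part 3 is the alternative.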

Extend a Linux LVM Volume on a VM - Part 1

As I mentioned in the recent Linux LVM post, there are two ways to prepare the physical volume (PV):

- use the entire disk as a PV (not recommended)
- or create a partition on the disk and use the partition as a PV.

The steps to extend an LVM volume differ between these two configurations.

Extend an LVM volume when the entire disk is the PV

1. Increase the VM’s hard disk size in vSphere Client
   - If there is a VM snapshot on the disk, its size cannot be changed. Remove all the snapshots first.
   - After increasing the disk size, take a snapshot as the backup.
2. Rescan the SCSI bus to verify the OS sees the new space on the disk
   - `ls /sys/class/scsi_host/`
   - `echo "- - -" > /sys/class/scsi_host/<host_name>/scan`
   - `tail -f /var/log/messages`
   - or
   - `ls /sys/class/scsi_disk/`
   - `echo '1' > /sys/class/scsi_disk/<0\:0\:0\:0>/device/rescan`
   - `tail -f /var/log/messages`
   - `fdisk -l`
3. Resize the PV
4. Verify the VG automatically sees the new space
5. Extend the LV
6. Resize the file system
7. Remove the VM snapshot once you have confirmed the data is intact
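The last few steps above are listed without commands. As a rough sketch, they can be expressed with the same LVM tools used elsewhere in this series, pointed at the whole disk rather than a partition. This is an assumption based on the partition-based procedure, not something taken from the original post. The `run` wrapper, the `DRY_RUN` variable, and the function name `extend_whole_disk_pv` are hypothetical; by default the commands are only printed.

```shell
#!/bin/sh
# Sketch: the remaining Part 1 steps when the whole disk is the PV.
# DRY_RUN (an assumption of this sketch) defaults to 1, so commands are
# only printed; set DRY_RUN=0 and run as root to execute them.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# extend_whole_disk_pv <disk> <volume_group_name> <logical_volume_name>
extend_whole_disk_pv() {
    disk=$1
    lv_path=/dev/$2/$3
    # Grow the PV to the new disk size, check that the VG shows free space,
    # give all free extents to the LV, then grow the ext2/3/4 file system.
    run pvresize "$disk" &&
    run vgs "$2" &&
    run lvextend -l +100%FREE "$lv_path" &&
    run resize2fs "$lv_path" &&
    run df -h
}
```

For example, `extend_whole_disk_pv /dev/sdb vg_data lv_data` prints the five commands in order so they can be reviewed first; the device and volume names here are placeholders.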

Linux Logical Volume Management (LVM) and Setup

LVM Layout

(source: RedHat Logical Volume Manager Administration)

LVM Components (from bottom to top)

- Hard Disks
- Partitions
  - LVM will work fine with the entire disk (without creating a partition) as a PV, but this is not recommended.
    - Other operating systems or disk utilities (e.g. fdisk) will not recognize the LVM metadata and will display the disk as free, so the disk is likely to be overwritten by mistake.
  - The best practice is to create a partition on the hard disk, then initialize the partition as a PV.
    - It is generally recommended to create a single partition that covers the whole disk (see Red Hat Logical Volume Manager Administration).
  - Using an entire disk as a PV and using a partition as a PV require different procedures when growing the hard disk size in the VM (see “Expanding LVM Storage”).
- Physical Volumes
- Volume Group
- Logical Volumes
- File Systems

LVM Setup

1. Add a new hard disk
2. Rescan the SCSI bus
   - `ls /sys/class/scsi_host/`
   - `echo "- - -" > /sys/class/scsi_host/<host_name>/scan`
   - `tail -f /var/log/messages`
   - or
   - `ls /sys/class/scsi_disk/`
   - `echo '1' > /sys/class/scsi_disk/<0\:0\:0\:0>/device/rescan`
   - `tail -f /var/log/messages`
3. Prepare the disk partition
   - `fdisk -l`
   - `fdisk </dev/sdb>`
     - `n` - add a new partition
       - `p` - primary partition
       - `1` - partition number
       - default - first cylinder
       - default - last cylinder
     - `t` - change a partition’s system id
       - `1` - partition number
       - `8e` - Linux LVM
     - `w` - write the table to disk and exit
   - `fdisk -l` to verify the new partition
   - Update partition table changes to kernel
     - `reboot`
     - or `partprobe </dev/sdb>`
     - Update (04/18/2016): In RHEL 6, partprobe only triggers the OS to update the partitions on a disk when none of its partitions are in use (e.g. mounted). If any partition on the disk is in use, partprobe will not update the partitions in the system because it is considered unsafe in some situations, so a reboot is required. See “How to use a new partition in RHEL6 without reboot?”
4. Initialize disks or disk partitions
   - `pvcreate </dev/sdb>` - skip step #3 and use the entire disk as a PV; not recommended
   - `pvcreate </dev/sdb1>` - use the partition created in step #3 as a PV; best practice
   - `pvdisplay`
   - `pvs`
5. Create a volume group
   - `vgcreate <volume_group_name> </dev/sdb1>`
   - `vgdisplay`
   - `vgs`
6. Create a logical volume
   - `lvcreate --name <logical_volume_name> --size <size> <volume_group_name>`
   - or `lvcreate -n <logical_volume_name> -L <size> <volume_group_name>`
   - `lvdisplay`
   - `lvs`
7. Create the file system on the logical volume
   - `mkfs.ext4 /dev/<volume_group_name>/<logical_volume_name>`
8. Mount the new volume
   - `mkdir </mount_point>`
   - `mount /dev/<volume_group_name>/<logical_volume_name> </mount_point>`
9. Add the new mount point in /etc/fstab
   - `vi /etc/fstab`
   - `/dev/<volume_group_name>/<logical_volume_name> </mount_point> ext4 defaults 0 0`
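Editing /etc/fstab by hand works, but the entry can also be generated and appended from a script. The sketch below builds the same line as the last step and adds it only if it is not already present. The function names `fstab_line` and `add_fstab_entry` are hypothetical, and the file to edit is passed as an argument so the functions can be tried on a copy first.

```shell
#!/bin/sh
# Sketch: build the /etc/fstab entry from the last step and append it only
# if it is not already present. The function names are hypothetical.

# fstab_line <volume_group_name> <logical_volume_name> <mount_point>
fstab_line() {
    printf '/dev/%s/%s %s ext4 defaults 0 0\n' "$1" "$2" "$3"
}

# add_fstab_entry <fstab_file> <line>
add_fstab_entry() {
    # -x matches whole lines only, -F treats the entry as a fixed string
    if grep -qxF -- "$2" "$1" 2>/dev/null; then
        echo "already present in $1"
    else
        printf '%s\n' "$2" >> "$1"
        echo "added to $1"
    fi
}
```

A possible use is `add_fstab_entry /etc/fstab "$(fstab_line vg_data lv_data /data)"`, followed by `mount -a` to confirm the entry mounts without errors before the next reboot. The volume and mount point names are placeholders.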
