While researching an issue with expanding a shared disk on Microsoft clustering VMs (cluster in a box, CIB), I learned more about the vmkfstools command.

vmkfstools --help lists many options but explains none of them, so I document them here. (Reference: vSphere Storage, Using vmkfstools)

# vmkfstools --help

OPTIONS FOR FILE SYSTEMS:

vmkfstools -C --createfs [vmfs3|vmfs5]

-b --blocksize #[mMkK]

-S --setfsname fsName

-Z --spanfs span-partition

-G --growfs grown-partition

deviceName

-P --queryfs -h --humanreadable

-T --upgradevmfs

vmfsPath

-y --reclaimBlocks vmfsPath [--reclaimBlocksUnit #blocks]

OPTIONS FOR VIRTUAL DISKS:

vmkfstools -c --createvirtualdisk #[gGmMkK]

-d --diskformat [zeroedthick|thin|eagerzeroedthick]

-a --adaptertype [buslogic|lsilogic|ide|lsisas|pvscsi]

-W --objecttype [file|vsan]

--policyFile <fileName>

-w --writezeros

-j --inflatedisk

-k --eagerzero

-K --punchzero

-U --deletevirtualdisk

-E --renamevirtualdisk srcDisk

-i --clonevirtualdisk srcDisk

-d --diskformat [zeroedthick|thin|eagerzeroedthick|rdm:<device>|rdmp:<device>|2gbsparse]

-W --object [file|vsan]

--policyFile <fileName>

-N --avoidnativeclone

-X --extendvirtualdisk #[gGmMkK]

[-d --diskformat eagerzeroedthick]

-M --migratevirtualdisk

-r --createrdm /vmfs/devices/disks/...

-q --queryrdm

-z --createrdmpassthru /vmfs/devices/disks/...

-v --verbose #

-g --geometry

-x --fix [check|repair]

-e --chainConsistent

-Q --objecttype name/value pair

--uniqueblocks childDisk

vmfsPath

OPTIONS FOR DEVICES:

-L --lock [reserve|release|lunreset|targetreset|busreset|readkeys|readresv] /vmfs/devices/disks/...

-B --breaklock /vmfs/devices/disks/...

vmkfstools -H --help

vmkfstools Command Syntax

vmkfstools options target

Options: one or more options, divided into three types: File System Options, Virtual Disk Options, and Storage Device Options.

Target: partition, device, or path

File System Options

- Listing Attributes of a VMFS Volume

The listed attributes include the file system label, if any, the number of extents comprising the specified VMFS volume, the UUID, and a listing of the device names where each extent resides.

vmkfstools -P -h <vmfsVolumePath>

vmkfstools -P -h /vmfs/volumes/netapp_sata_nfs1/

- Creating a VMFS Datastore

vmkfstools -C vmfs5 -b <blocksize> -S <datastoreName> <partitionName>

vmkfstools -C vmfs5 -b 1m -S my_vmfs /vmfs/devices/disks/naa.ID:1

- Extending an Existing VMFS Volume

vmkfstools -Z <span_partition> <head_partition>

vmkfstools -Z /vmfs/devices/disks/naa.disk_ID_2:1 /vmfs/devices/disks/naa.disk_ID_1:1

Caution: When you run this option, you lose all data that previously existed on the SCSI device you specified in span_partition.

- Growing an Existing Extent

vmkfstools -G device device

vmkfstools --growfs /vmfs/devices/disks/disk_ID:1 /vmfs/devices/disks/disk_ID:1

Virtual Disk Options

- Creating a Virtual Disk

vmkfstools -c <size> -d <diskformat> <vmdkFile>

vmkfstools -c 2048m testdisk1.vmdk

- Initializing a Virtual Disk

vmkfstools -w <vmdkFile>

This option cleans the virtual disk by writing zeros over all its data. Depending on the size of your virtual disk and the I/O bandwidth to the device hosting the virtual disk, completing this command might take a long time.

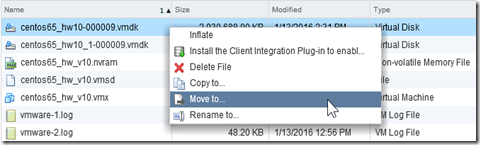

Caution: When you use this command, you lose any existing data on the virtual disk.

- Inflating a Thin Virtual Disk

vmkfstools -j <vmdkFile>

This option converts a thin virtual disk to eagerzeroedthick, preserving all existing data. The option allocates and zeroes out any blocks that are not already allocated.

- Removing Zeroed Blocks (Converting a Virtual Disk to a Thin Disk)

vmkfstools -K <vmdkFile>

Use the vmkfstools command to convert any thin, zeroedthick, or eagerzeroedthick virtual disk to a thin disk with zeroed blocks removed.

This option deallocates all zeroed out blocks and leaves only those blocks that were allocated previously and contain valid data. The resulting virtual disk is in thin format.

- Converting a Zeroedthick Virtual Disk to an Eagerzeroedthick Disk

vmkfstools -k <vmdkFile>

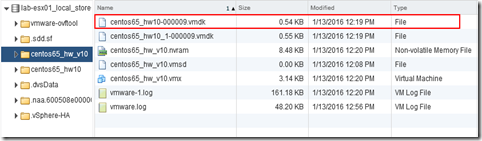

Use the vmkfstools command to convert any zeroedthick virtual disk to an eagerzeroedthick disk. While performing the conversion, this option preserves any data on the virtual disk.

- Deleting a Virtual Disk

vmkfstools -U <vmdkFile>

This option deletes files associated with the virtual disk listed at the specified path on the VMFS volume.

- Renaming a Virtual Disk

vmkfstools -E <oldName> <newName>

- Cloning or Converting a Virtual Disk or Raw Disk

cloning: vmkfstools -i <sourceVmdkFile> <targetVmdkFile>

vmkfstools -i /vmfs/volumes/templates/gold-master.vmdk /vmfs/volumes/myVMFS/myOS.vmdk

converting: vmkfstools -i <sourceVmdkFile> -d <diskformat> <targetVmdkFile>

- Extending a Virtual Disk

vmkfstools -X <newSize> [-d eagerzeroedthick] <vmdkFile>

Use -d eagerzeroedthick to ensure that the extended disk is in eagerzeroedthick format.
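Because extending a base disk that has snapshots is unsafe (see the caution that follows), a small guard before calling vmkfstools -X may help. The following is only a sketch under stated assumptions, not an official procedure: it assumes snapshot disks sit in the same directory as the base disk and follow the common `<name>-00000N.vmdk` naming, and the function names `has_snapshot_disks` and `safe_extend` are made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: returns 0 if snapshot disks appear to exist next to
# the given base VMDK. ASSUMPTION: snapshots use <name>-00000N.vmdk naming.
has_snapshot_disks() {
    base=$1
    dir=$(dirname "$base")
    name=$(basename "$base" .vmdk)
    for f in "$dir/$name"-[0-9][0-9][0-9][0-9][0-9][0-9].vmdk; do
        # If the glob matched nothing, $f is the literal pattern and -e fails.
        [ -e "$f" ] && return 0
    done
    return 1
}

# Hypothetical wrapper: refuse to extend when snapshot disks are found.
safe_extend() {
    new_size=$1
    vmdk=$2
    if has_snapshot_disks "$vmdk"; then
        echo "Refusing to extend $vmdk: snapshot disks found" >&2
        return 1
    fi
    vmkfstools -X "$new_size" -d eagerzeroedthick "$vmdk"
}
```

A call such as `safe_extend 50g /vmfs/volumes/myVMFS/vm1/vm1.vmdk` (path and size are placeholders) would then run the extend only when no matching snapshot disk is found. The check is deliberately conservative and does not replace confirming the snapshot state in the vSphere client.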

Caution: Do not extend the base disk of a virtual machine that has snapshots associated with it. If you do, you can no longer commit the snapshot or revert the base disk to its original size.

- Displaying Virtual Disk Geometry

vmkfstools -g <vmdkFile>

The output is in the form: Geometry information C/H/S, where C represents the number of cylinders, H represents the number of heads, and S represents the number of sectors.

- Checking and Repairing Virtual Disks

vmkfstools -x [check|repair] <vmdkFile>

Use this option to check or repair a virtual disk after an unclean shutdown.

Storage Device Options

- Managing SCSI Reservation of LUNs

Caution: Using the -L option can interrupt the operations of other servers on a SAN. Use the -L option only when troubleshooting clustering setups.

- vmkfstools -L reserve <deviceName>

Reserves the specified LUN. After the reservation, only the server that reserved that LUN can access it. If other servers attempt to access that LUN, a reservation error results.

- vmkfstools -L release <deviceName>

Releases the reservation on the specified LUN. Other servers can access the LUN again.

- vmkfstools -L lunreset <deviceName>

Resets the specified LUN by clearing any reservation on the LUN and making the LUN available to all servers again. The reset does not affect any of the other LUNs on the device. If another LUN on the device is reserved, it remains reserved.

- vmkfstools -L targetreset <deviceName>

Resets the entire target. The reset clears any reservations on all the LUNs associated with that target and makes the LUNs available to all servers again.

- vmkfstools -L busreset <deviceName>

Resets all accessible targets on the bus. The reset clears any reservation on all the LUNs accessible through the bus and makes them available to all servers again.

- When entering the device parameter, use the following format:

/vmfs/devices/disks/vml.vml_ID:P
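As a rough illustration of the device format above, the sketch below builds the path from its two parts and prints the resulting command instead of running it, since -L can disrupt other hosts on the SAN. It assumes that P stands for the partition number; the helper name `vml_device_path` and the value `vml_ID` are placeholders, not real identifiers.

```shell
#!/bin/sh
# Hypothetical helper: build a device path in the vml.vml_ID:P form.
# $1 = vml ID (placeholder), $2 = partition number (ASSUMED meaning of P)
vml_device_path() {
    printf '/vmfs/devices/disks/vml.%s:%s\n' "$1" "$2"
}

# Dry run: print the command rather than execute it.
dev=$(vml_device_path vml_ID 1)
echo "vmkfstools -L lunreset $dev"
```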


Hidden Options (reference: “Some useful vmkfstools ‘hidden’ options”)

- VMDK Block Mappings

vmkfstools -t0 <vmdkFile>

Displays the format of each chunk in a VMDK file. The tag shown for a chunk indicates the disk format:

- VMFS -- = eager zeroed thick

- VMFS Z- = lazy zeroed thick

- NOMP -- = thin
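The mapping above can be captured in a small lookup, for example when scanning -t0 output by hand. This is a minimal sketch that covers only the three tags listed here; the function name `chunk_format` is made up for illustration, and real -t0 output carries more fields per chunk than the tag alone.

```shell
#!/bin/sh
# Map a chunk tag from `vmkfstools -t0` output to a disk format,
# using only the three tags listed above.
chunk_format() {
    case "$1" in
        "VMFS --") echo "eager zeroed thick" ;;
        "VMFS Z-") echo "lazy zeroed thick" ;;
        "NOMP --") echo "thin" ;;
        *)         echo "unknown" ;;
    esac
}

chunk_format "VMFS Z-"   # prints: lazy zeroed thick
```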